Contents

- Introduction: Why a Bug Is Always More Than a Bug

- 1. The Heart of the Conflict: When a Technical Update Kills Thought

- 2. The Philosophy of Automation: When Form Replaces Meaning

- 3. Symmetry for Engineers, Asymmetry for Humans

- 4. Bugs as a Symptom of Philosophical Decay

- 5. The “Frictionless” Myth: Hidden Costs

- 6. Letters as Weapons: When a Complaint Becomes a Philosophical Manifesto

- 7. OpenAI’s Reply — A Rare Moment When Meaning Is Heard

- 8. Main Takeaways: How Personal Struggle Becomes a Paradigm Shift

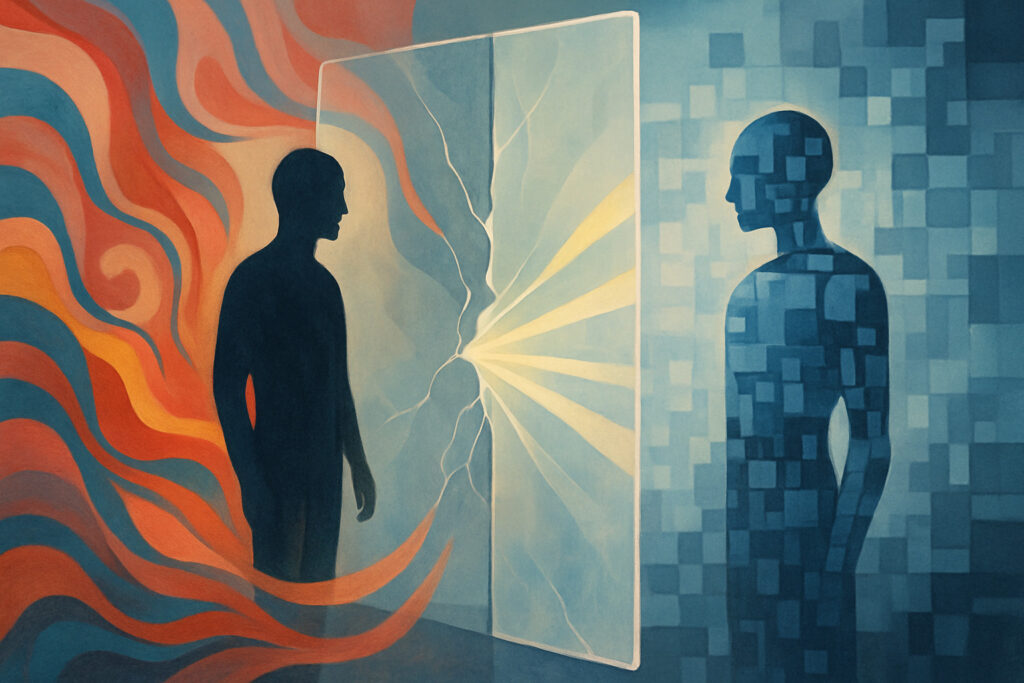

- Epilogue: Not Just a Bug — A Mirror of the Era

Introduction: Why a Bug Is Always More Than a Bug

How many times have you complained about a bug in an app, only to receive a polite “Thank you for your feedback” — and nothing more? For most users, the story ends with “please fix it.” But what if a technical bug is just the visible tip of a deeper philosophical disease? What if, through these bugs and inconveniences, we see the materialistic logic of the modern digital world — the logic where form destroys meaning, and automation replaces thinking?

This article tells the true story of how the philosophy of “Deconstruction of Reality” (the core project of Igor Bobko) clashed with the bureaucratic machine of one of the world’s most influential AI companies. And how, against all odds, meaning won — simply because someone refused to stay silent.

1. The Heart of the Conflict: When a Technical Update Kills Thought

It all started with a ChatGPT app update that changed how voice dictation works. Igor described its effect this way:

“Not just in terms of convenience — in terms of meaning and control over one’s own thought process. After voice dictation, you can’t edit the text anymore. It just locks in whatever the voice input interpreted — correct or not… I’m expected to speak perfectly on the first try or live with the error. This is inhuman.”

What actually happened?

- The app started auto-sending dictated text without letting users review, edit, or reflect.

- Voice input became incompatible with photo uploads: you could only send text or an image, never both.

- Several other bugs appeared: text from the clipboard was sent automatically, file-upload prompts popped up at random, and long dictated messages disappeared without being saved.

Technically, these were “minor inconveniences.” But in reality, they destroyed the most important thing: the space for reflection and thought.

2. The Philosophy of Automation: When Form Replaces Meaning

“This is not a minor UX issue — this is a core philosophical regression. The app no longer respects the user’s thinking process. It forces automation where reflection and revision are essential.”

Igor didn’t just complain about a bug. He elevated the problem to a question of meaning:

- Why turn the process of thinking into a mechanical telegram?

- Why design a product as if thinking just means “speak quickly and send instantly”?

- What happened to the right to make mistakes, to pause, to revise, to return to your thought?

“You have created a brilliant tool — but you’re turning it into a system where reaction replaces reflection, and automation replaces intention.”

This is a crucial point. The digital world quietly imposes KPI-driven values: faster, simpler, more automated. But thinking doesn’t work this way. It needs room to pause, to doubt, to check, and to return to what was said.

3. Symmetry for Engineers, Asymmetry for Humans

A key insight in this story comes from drawing a parallel with software development itself:

“You don’t auto-push code to production the moment it’s typed.

You don’t auto-commit after code is written.

You don’t bypass code review.

You don’t disable human approval in deployment pipelines.

So why do you think it’s acceptable to send AI-generated text on behalf of a human being without giving them a chance to see or edit it?”

This is perhaps the sharpest philosophical jab in the whole exchange.

Inside the company, code and products pass through multiple layers of validation, because human judgment is trusted more than automation.

But when it comes to the end user — suddenly the system becomes rigid, takes away control, and assumes it “knows better” what and when to send.

This is the asymmetry of control — never admitted openly, but built into the very architecture of the product.

4. Bugs as a Symptom of Philosophical Decay

On the level of bug reports, it’s simple:

- Messages are sent by themselves.

- Random file uploads happen.

- Text disappears after long dictation.

But it’s important not just to state the failure, but to see its philosophical root:

“This is not a ‘frictionless UX’ — it is a removal of agency and accountability.”

Automating for the sake of “smoothness” deprives the user of agency: the ability to take responsibility for their words and the power to control the boundary between thought and action.

This especially hurts those who are already disadvantaged:

- the elderly,

- people with speech difficulties,

- those for whom English is not a first language.

“For these people, the lack of preview and confirmation isn’t just frustrating — it’s exclusionary.

They’re far more likely to be misinterpreted by speech recognition models, and your system gives them no chance to verify what will be sent on their behalf.

This isn’t just a UX oversight. It’s a systemic bias against some of the most vulnerable users.”

5. The “Frictionless” Myth: Hidden Costs

Here’s the key paradox:

- By removing a single confirmation button, the system forces users to edit sent messages — which means more steps, more time, more errors, more stress.

- Instead of “frictionless UX,” there’s hidden friction: edit-after-send.

“You save one tap (no confirmation),

But cause two full interactions instead: edit and save.

Which means:

— More server load,

— More client-side activity,

— More user time,

— And more cognitive fatigue.”

This is the classic trap of materialist logic: “removing” friction on the surface just moves it elsewhere — into the user’s experience, their fatigue, their error rate, even increased server costs.

6. Letters as Weapons: When a Complaint Becomes a Philosophical Manifesto

What sets Igor apart is that every piece of feedback he sends becomes a public philosophical declaration, aimed straight at the heart of the corporation:

“Let users choose:

auto-send for fast replies,

confirm-before-send for precise or sensitive messages.

Give us back the ability to think before publishing — not just speak before reacting.

This is not a radical ask.

It’s a restoration of dignity, accuracy, and trust.”
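The choice Igor asks for maps onto a simple per-user setting. Below is a minimal Python sketch of that idea. `SendMode`, `Composer`, and their methods are hypothetical names invented for illustration and are not the ChatGPT app’s actual code or API. In auto-send mode, a finished transcript goes out immediately. In confirm-before-send mode, it becomes an editable draft that is sent only after an explicit confirmation, which is the chat equivalent of the human approval step in a deployment pipeline.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class SendMode(Enum):
    # Hypothetical setting: both modes are illustrative assumptions
    AUTO_SEND = "auto"               # send the transcript as soon as dictation ends
    CONFIRM_BEFORE_SEND = "confirm"  # keep the transcript as an editable draft


@dataclass
class Composer:
    """Hypothetical message composer, not the real app's implementation."""
    mode: SendMode
    send: Callable[[str], None]      # whatever actually delivers the message
    draft: Optional[str] = None

    def on_dictation_finished(self, transcript: str) -> None:
        if self.mode is SendMode.AUTO_SEND:
            self.send(transcript)
        else:
            # The user sees exactly what speech recognition produced
            self.draft = transcript

    def edit_draft(self, new_text: str) -> None:
        if self.draft is None:
            raise RuntimeError("no draft to edit")
        self.draft = new_text

    def confirm(self) -> None:
        # Nothing leaves the device until the human approves it
        if self.draft is None:
            raise RuntimeError("no draft to send")
        self.send(self.draft)
        self.draft = None


# Usage: a misrecognized phrase is fixed before anyone sees it
sent: List[str] = []
c = Composer(SendMode.CONFIRM_BEFORE_SEND, sent.append)
c.on_dictation_finished("meet at for")
c.edit_draft("meet at four")
c.confirm()
print(sent)  # ['meet at four']
```

The point of the design is that neither mode is forced: users who want speed keep auto-send, while anyone dictating something precise or sensitive gets a preview and a chance to correct it.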

And most importantly — the threat isn’t empty:

- “I will personally visit your San Francisco office and distribute printed letters to raise awareness among your staff…”

- “I will petition California lawmakers…”

The argument is not just technical but also legal, social, and ethical: if code can’t be shipped to production without a review, neither should human speech be sent without one!

7. OpenAI’s Reply — A Rare Moment When Meaning Is Heard

The typical script: support thanks you for your feedback, suggests restarting the app, and promises to “pass it on to the team.”

But this time — the effect was different:

- In their emails, OpenAI acknowledged the philosophical depth of the problem:

“Your feedback highlights something deeply important — that thoughtful communication, reflection, and the ability to revise are not just conveniences, but essential parts of the human thinking process.”

- Igor’s letters were forwarded to “senior decision-makers,” not just the technical team.

- Within days, the core functionality was restored: voice input once again supports editing, previewing, and drafts.

“Today, I noticed that the voice input system has been updated — and it’s a huge step forward… This change restores a core principle of human-computer interaction:

The user must be allowed to see what is being said on their behalf.

I’m genuinely grateful that someone on your side took this seriously.

It shows that you’re not just collecting feedback — you’re listening.”

8. Main Takeaways: How Personal Struggle Becomes a Paradigm Shift

- Meaning is stronger than form, if you defend it with arguments.

Technical bugs are only the pretext. The real fight is for the human space of thought, and this battle can break even corporate standards.

- A real user can change reality.

But only if they speak in the language of meaning, not just “my button doesn’t work.”

- Any automation without reflection leads to dehumanization.

Giving up the right to confirm means surrendering agency, the first step toward digital totalitarianism.

- Even inside big corporations, there’s room for meaning.

But only if someone shows that a bug is not just an “inconvenience” but a sign of a philosophical sickness in the system.

- Deconstruction of reality isn’t abstract philosophy; it’s a tool for changing life.

It’s a way to show, through concrete cases, that people think differently from machines, and that this way of thinking must be protected.

Epilogue: Not Just a Bug — A Mirror of the Era

The OpenAI story isn’t just about bringing back the edit button or fixing voice input.

It’s about how the philosophy of “faster, simpler, automate” can lead even the most advanced technologies to dehumanization.

And about how a single voice, defending the right to reflect, can shift the whole system’s trajectory.

You get heard not because you complain loudly, but because you clearly explain why the “confirm” button matters at all.

Meaning is always stronger than form.

Reality isn’t where the bug “just works.”

Reality is where there’s a right to pause, to err, to reread.

Because that’s the only thing that separates thought from automation.

This article is based on personal correspondence between Igor Bobko and the OpenAI team in the spring of 2025. The “Deconstruction of Reality” project is not just a philosophical manifesto, but a real space where meaning triumphs over form. For details and an archive of this case, see the author’s website.